Thursday, November 7, 2024

Benchmarking Web Crawlers for RAG

A comprehensive benchmark of web crawlers for RAG systems. We tested content extraction quality and speed across 1,500 pages from 15 diverse websites.

When building RAG (Retrieval-Augmented Generation) systems, your crawler is one of the foundations. Get it wrong, and no embedding model or retrieval algorithm, however sophisticated, can compensate for low-quality content. Yet most crawler benchmarks focus on speed and scale while treating content quality as secondary. We set out to change that by creating a benchmark built around the real-world demands of RAG.

The Challenge

Websites vary dramatically in structure and complexity, and a crawler that performs well on one type of site can fail badly on another. We therefore selected 15 diverse websites across multiple verticals and crawled 100 pages from each (1,500 pages in total). This dataset captures the messy diversity of the modern web.

To make the evaluation meaningful, we measured two core dimensions:

• Content Quality

• Crawling Speed

Measuring Content Quality: Introducing CES

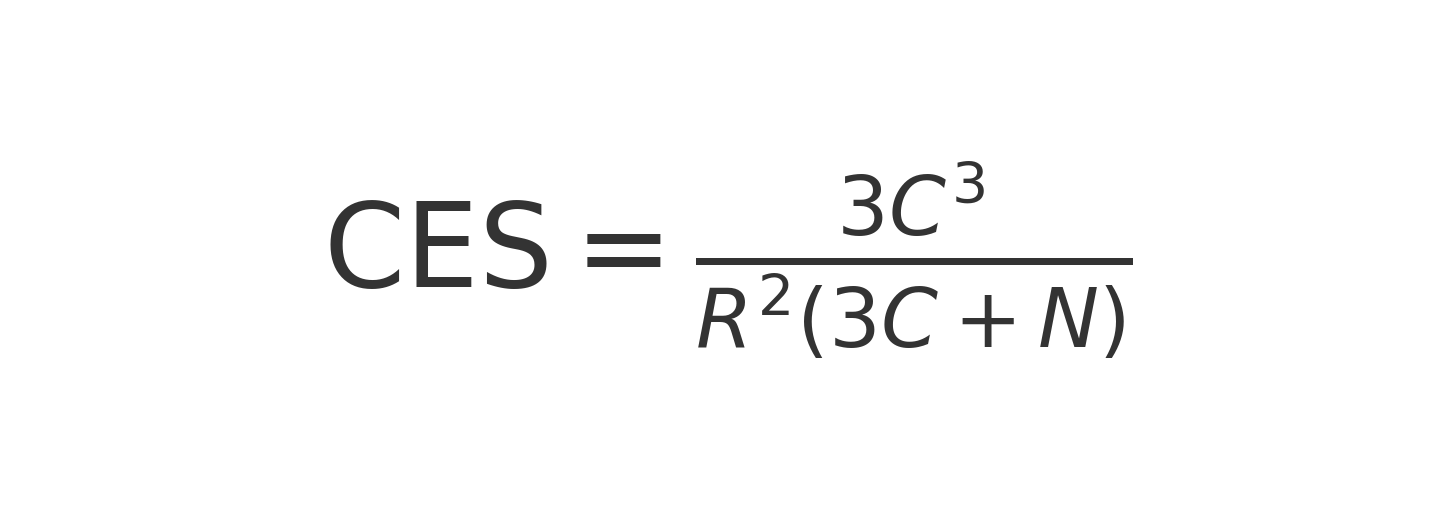

For RAG, content quality is paramount. Missing relevant content is more damaging than including extra noise. To reflect this, we developed a metric specifically for RAG use cases: the Content Extraction Score (CES).

• R = Relevant data expected from a webpage

• C = Relevant data correctly extracted

• N = Irrelevant (noise) data extracted

The CES formula penalizes missing relevant information more heavily than extracted noise, capturing what matters most for downstream retrieval.
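The exact CES formula is not reproduced in this text, so the sketch below is not CES. It is a hypothetical stand-in that shows the same idea of an asymmetric penalty using a standard technique, the F-beta score with beta > 1, which weights recall (C / R) above precision (C / (C + N)). It assumes R, C and N are measured in the same units (for example, tokens), and the function name and default beta = 2 are illustrative choices, not part of the benchmark.

```python
def recall_weighted_score(relevant: float, correct: float, noise: float,
                          beta: float = 2.0) -> float:
    """Hypothetical F-beta style extraction score. NOT the benchmark's CES.

    relevant: R, amount of relevant data expected from the page
    correct:  C, amount of relevant data correctly extracted
    noise:    N, amount of irrelevant data extracted
    beta > 1 makes missing content cost more than extra noise.
    """
    if relevant <= 0:
        raise ValueError("relevant (R) must be positive")
    if not 0 <= correct <= relevant:
        raise ValueError("correct (C) must be between 0 and R")
    if noise < 0:
        raise ValueError("noise (N) must be non-negative")
    if correct == 0:
        # Nothing relevant was extracted, so the score bottoms out.
        return 0.0
    recall = correct / relevant              # share of expected content we got
    precision = correct / (correct + noise)  # share of output that is relevant
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Missing half the content scores lower than doubling the output with noise.
missing_half = recall_weighted_score(relevant=100, correct=50, noise=0)
lots_of_noise = recall_weighted_score(relevant=100, correct=100, noise=100)
print(round(missing_half, 3), round(lots_of_noise, 3))
```

With beta = 2, the page that lost half its relevant content scores about 0.556, while the page that kept everything but doubled its size with noise scores about 0.833, mirroring the priority described above.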

Measuring Crawling Speed

We also measured the time required to extract content from 100 pages per site across all 15 test sites. This let us evaluate crawler efficiency under widely varying real-world conditions, such as JavaScript-heavy pages, dynamically loaded content, and rate limiting, and see how well each crawler takes advantage of parallel processing.
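A per-site timing measurement of this kind can be sketched as follows. This is a minimal illustration, not the harness used for the benchmark: `extract` is a hypothetical callable standing in for whichever crawler is under test, and the thread-pool size of 8 is an arbitrary assumption. It records wall-clock time per site, so parallelism inside the crawler and network delays are both reflected in the result.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def time_site(urls: List[str], extract: Callable[[str], str],
              max_workers: int = 8) -> Tuple[float, List[str]]:
    """Extract every URL of one site in parallel; return (seconds, contents)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map keeps results in the same order as the input URLs.
        results = list(pool.map(extract, urls))
    return time.perf_counter() - start, results


def benchmark(sites: Dict[str, List[str]], extract: Callable[[str], str],
              max_workers: int = 8) -> Dict[str, float]:
    """Return wall-clock extraction time in seconds for each site."""
    timings: Dict[str, float] = {}
    for site, urls in sites.items():
        elapsed, _ = time_site(urls, extract, max_workers)
        timings[site] = elapsed
    return timings


if __name__ == "__main__":
    # Hypothetical stand-in for a real crawler: sleeps instead of fetching.
    def fake_extract(url: str) -> str:
        time.sleep(0.001)
        return f"content of {url}"

    sites = {f"site-{i}": [f"https://example.com/{i}/{j}" for j in range(100)]
             for i in range(3)}
    for site, seconds in benchmark(sites, fake_extract).items():
        print(f"{site}: {seconds:.3f}s for {len(sites[site])} pages")
```

Swapping `fake_extract` for a function that calls a real crawler would time that crawler's end-to-end extraction per site.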

Results: Quality + Speed Benchmarking

Across both dimensions, Olyptik outperformed the major AI-focused crawling services we tested: in speed, in handling JavaScript-heavy pages, and, most importantly, in content completeness.

Our Honest Opinion

After extensive testing, here's what we learned about each platform:

Crawl4AI

If you're looking for fine-grained configuration and versatile tooling at minimal cost, go for Crawl4AI.

But be aware: the real cost is time, and lots of it. Tweaking dozens of crawl parameters yourself doesn't scale, and if your customers need to crawl their own sites for RAG, it will give them headaches. We speak from experience.

Apify

Apify works well for targeted, per-site crawling. The main drawback is that each website calls for a different "sub-crawler" with an entirely new set of configuration options, which turns into a never-ending tweaking game.

Olyptik

If the goal is to give users a simple interface with powerful, fast crawling that handles a wide variety of website structures, without requiring them to tweak dozens of configuration options, Olyptik is built for exactly that.